Introduction

ChatGPT (Chat Generative Pre-trained Transformer) is a prominent artificial intelligence (AI) language model built on the transformer architecture. This type of neural network excels at processing sequential data, particularly text, and is trained on vast text datasets. During training the model learns patterns and relationships within the data, which enables it to generate coherent language. Fine-tuning with human input and reinforcement learning from human feedback (RLHF) refines ChatGPT’s responses to a variety of queries. The most recent development is GPT-4, a large language model (LLM) that has been updated to understand, interpret and analyse images. These kinds of developments point to a growing role for AI in clinical settings.

The Potential of GPT-4 in Medical Image Analysis

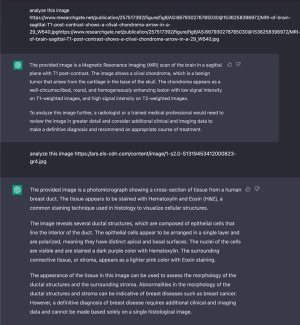

The potential impact on medical diagnostics is significant. By leveraging image analysis, GPT-4 could enhance medical professionals’ diagnostic accuracy and speed, particularly in underserved regions. Evaluating GPT-4’s diagnostic prowess involved exposing it to diverse medical imaging modalities, from X-rays to magnetic resonance imaging (MRI) and optical coherence tomography (OCT) images. As demonstrated in Figure 1, GPT-4 can respond to prompts that ask it specifically to interpret medical images such as MRI and OCT scans.
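As an illustration of what such an image prompt can look like in practice, the sketch below packages one image and one question into a single multimodal message. It is a minimal sketch under stated assumptions: the article does not describe how its prompts were submitted, the function name `build_image_prompt` is hypothetical, and the message layout (a text part plus a base64 data-URL image part) follows the content-parts convention used by some multimodal chat interfaces. No request is sent, and the image bytes are a placeholder rather than a real scan.

```python
import base64
import json


def build_image_prompt(image_bytes: bytes, question: str, mime_type: str = "image/png") -> dict:
    """Package one image and one question as a single multimodal user message.

    Hypothetical helper for illustration; the layout is an assumed convention,
    not the method used in the article.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "role": "user",
        "content": [
            {"type": "text", "text": question},
            {"type": "image_url", "image_url": {"url": f"data:{mime_type};base64,{encoded}"}},
        ],
    }


# Placeholder bytes stand in for a de-identified scan; nothing is transmitted.
message = build_image_prompt(
    b"placeholder-image-bytes",
    "Describe any abnormality visible in this OCT scan.",
)
print(json.dumps(message, indent=2))
```

Any real use would need de-identified images and clinician review of the model’s answer.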

Improving GPT-4’s image analysis requires further training on extensive medical image datasets so that it can capture the subtle patterns and correlations crucial for accurate diagnosis. For all its capabilities, GPT-4 has limitations, notably its reliance on patterns in its training data. This reliance means performance may vary when the model faces novel challenges or data that differ from its training corpus. Addressing AI bias requires incorporating diverse datasets to strengthen the model’s adaptability and reduce skew in its decision-making.
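One simple way to surface such performance disparities is to score a model’s answers separately for each group of cases, for example per imaging modality. The sketch below is a minimal illustration: the function name `accuracy_by_group` is hypothetical, and the records are invented toy data, not results from any evaluation of GPT-4.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Return the fraction of correct answers within each group label."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


# Invented toy records: (modality, model answer, reference diagnosis).
toy_records = [
    ("X-ray", "pneumonia", "pneumonia"),
    ("X-ray", "normal", "normal"),
    ("MRI", "glioma", "meningioma"),
    ("MRI", "normal", "normal"),
    ("OCT", "drusen", "drusen"),
]
scores = accuracy_by_group(toy_records)
print(scores)
```

A large gap between groups would suggest that the training data under-represents the weaker group, which is where more diverse datasets would matter most.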

Challenges and Considerations in GPT-4 Utilisation

GPT-4’s limitations include gaps in contextual understanding, which can lead to misconceptions and inaccuracies, especially in technical domains. Because outputs may be unreliable, users must verify information independently. The opaque nature of AI models calls for cautious interpretation of outputs to avoid errors. In dynamic fields like healthcare, responses may be outdated or erroneous. Furthermore, privacy concerns arise from potential data collection practices. Competing LLMs like Google’s Gemini (formerly Bard) and Meta’s Llama 2 with image analysis capabilities signal a growing landscape. Future efforts should focus on equitable and accountable LLM development through open-source code and oversight mechanisms.

Conclusion

While GPT-4 showcases remarkable advancements in automated medical image analysis, challenges such as contextual understanding, reliability, and privacy concerns persist. As the field evolves with new models like Gemini and Llama 2, prioritising accountability and equity through open-source practices is crucial for the future of AI-driven healthcare innovations. Would you use GPT-4 to interpret your medical images?