Large Language Models (LLMs) are becoming increasingly common in healthcare. OpenAI, in collaboration with Microsoft Research, is exploring how such technology can be used in healthcare and medical applications, aiming to understand its opportunities, limitations, and risks in this context. Google’s Language Model for Dialogue Applications, or “LaMDA” (since replaced by Google’s Pathways Language Model, “PaLM”), and OpenAI’s earlier Generative Pre-trained Transformer 3.5 (GPT-3.5) have also been studied for medical applications. Interestingly, although these LLMs were not specifically trained for healthcare, they demonstrated competence in the medical field, drawing on openly available information from the internet.

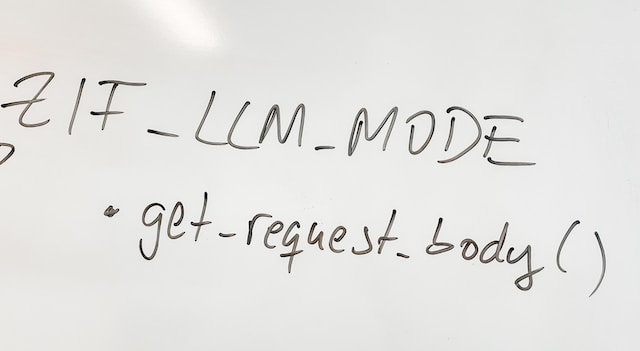

These LLMs have been used to develop the next tool in a physician’s pocket: a medical AI chatbot. Combining OpenAI’s GPT-4 with medical expertise produced a chatbot that engages users conversationally. Users start a session by entering a query, or “prompt”, in natural language, and GPT-4 responds, creating a human-like conversation. Because the system keeps track of the context of an ongoing conversation, it is easier to use and feels more natural.
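The article does not describe how the chatbot is implemented, but one common way a chat system keeps context is to store every turn and send the whole history to the model with each new prompt. The sketch below illustrates that approach under stated assumptions: the role/content message format resembles the convention of chat-style LLM APIs, while `ChatSession` and `echo_model` are hypothetical names, and `echo_model` is a stub standing in for GPT-4 rather than a real API call.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


class ChatSession:
    """Minimal chat session that keeps the running conversation history.

    `model` is a hypothetical callable standing in for an LLM such as GPT-4:
    it receives the full message history and returns the assistant's reply.
    """

    def __init__(self, model: Callable[[List[Message]], str], system_prompt: str) -> None:
        self.model = model
        # The history starts with a system message that frames the session.
        self.history: List[Message] = [{"role": "system", "content": system_prompt}]

    def ask(self, prompt: str) -> str:
        # Record the user's natural-language prompt.
        self.history.append({"role": "user", "content": prompt})
        # Send the entire history so the model can see all earlier turns.
        reply = self.model(self.history)
        # Store the reply so later turns can refer back to it.
        self.history.append({"role": "assistant", "content": reply})
        return reply


def echo_model(history: List[Message]) -> str:
    """Hypothetical stand-in model: reports how many user turns it has seen."""
    user_turns = sum(1 for m in history if m["role"] == "user")
    return f"I have seen {user_turns} user message(s)."


session = ChatSession(echo_model, "You are a careful medical assistant.")
print(session.ask("What is metformin used for?"))     # I have seen 1 user message(s).
print(session.ask("And its common side effects?"))    # I have seen 2 user message(s).
```

Because the full history is resent on every turn, a follow-up such as “its common side effects” can be resolved against the earlier question; in practice, very long sessions must eventually be truncated or summarised to fit the model’s context window.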

However, its responses are sensitive to the wording of the prompt, so prompts must be carefully developed and tested. GPT-4 can accurately answer prompts that have a definitive answer, and it can also engage in complex interactions around prompts that lack a single correct answer. It can also check for errors, identifying mistakes both in its own work and in human-generated content.

GPT-4’s medical knowledge can support tasks such as consultation, diagnosis, and education. It can read medical research material and engage in an informed discussion about it. However, like human reasoning, GPT-4 is fallible: it makes mistakes, although it can also identify them. The medical AI chatbot can write medical notes based on exchanges between providers and patients, structuring them in the subjective, objective, assessment, and plan (SOAP) format and including billing codes where needed. It can also understand authorisation information and prescriptions that comply with the Health Level Seven (HL7) Fast Healthcare Interoperability Resources (FHIR) standard.
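To make the SOAP structure concrete, a generated note can be held as a simple record with one field per section. This is a hypothetical sketch: the `SOAPNote` class, its field names, the sample clinical content, and the placeholder billing code are illustrative only, not the article’s format or an entry from a real coding system.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class SOAPNote:
    """Hypothetical container for a clinical note in SOAP format."""

    subjective: str  # what the patient reports (symptoms, history)
    objective: str  # what is observed or measured (exam, vitals, results)
    assessment: str  # the clinician's interpretation or working diagnosis
    plan: str  # next steps: tests, treatment, follow-up
    billing_codes: List[str] = field(default_factory=list)  # optional codes

    def render(self) -> str:
        """Render the note as one labelled line per SOAP section."""
        sections = [
            ("S", self.subjective),
            ("O", self.objective),
            ("A", self.assessment),
            ("P", self.plan),
        ]
        lines = [f"{label}: {text}" for label, text in sections]
        if self.billing_codes:
            lines.append("Codes: " + ", ".join(self.billing_codes))
        return "\n".join(lines)


# Illustrative content only; "HYPOTHETICAL-01" is a placeholder, not a real code.
note = SOAPNote(
    subjective="Reports a dry cough for five days, no fever.",
    objective="Temperature 36.8 C; chest clear on auscultation.",
    assessment="Likely viral upper respiratory infection.",
    plan="Supportive care; return if symptoms worsen.",
    billing_codes=["HYPOTHETICAL-01"],
)
print(note.render())
```

Keeping the sections as separate fields, rather than one block of free text, makes it easier for a clinician to review each part of a generated note on its own.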

However, problems such as false responses, or “hallucinations”, pose real dangers in a medical context. For instance, the AI chatbot produced a medical note that recorded a body-mass index (BMI) even though no information from which it could be derived had been entered into the system. In another instance, the chatbot reported no problems for the patient, but the clinician identified signs of medical complications.
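For context, using the standard definition (not spelled out in the article), BMI is computed from two measurements, so a note cannot legitimately report it unless both were available:

$$
\text{BMI} = \frac{\text{weight in kilograms}}{(\text{height in metres})^2}
$$

With neither weight nor height entered, there is nothing from which a BMI value could have been calculated.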

While these tools can significantly enhance the consultation process and assist both provider and patient, they are not without flaws and risks. The article describes one mitigation: asking the chatbot to re-read its own output, which led it to correctly identify these errors.
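The article’s mitigation relies on the model itself re-reading its output. As a much cruder, rule-based analogue (a different technique, shown only to illustrate the idea of checking a note against its source), the sketch below flags any number in a generated note that never appears in the conversation transcript. The function name `unsupported_numbers` and the sample texts are hypothetical.

```python
import re
from typing import List

# Matches integers and decimals such as "128" or "22.5".
NUMBER = re.compile(r"\d+(?:\.\d+)?")


def unsupported_numbers(note: str, transcript: str) -> List[str]:
    """Return numbers in `note` that never appear in `transcript`.

    Hypothetical, rule-based stand-in for a "re-read" pass: values are
    compared as exact strings, so "128" and "128.0" count as different.
    """
    source_numbers = set(NUMBER.findall(transcript))
    flagged: List[str] = []
    for value in NUMBER.findall(note):
        # Keep each unsupported value once, in order of appearance.
        if value not in source_numbers and value not in flagged:
            flagged.append(value)
    return flagged


transcript = "Patient reports feeling tired. Blood pressure today is 128 over 82."
note_text = "O: BP 128/82. BMI 22.5."
print(unsupported_numbers(note_text, transcript))  # ['22.5']
```

Here the BMI value is flagged because nothing in the transcript supports it, mirroring the first error described earlier. A check like this cannot catch unsupported non-numeric statements, such as the missed complications in the second example, so it complements rather than replaces a careful review of the note.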

The article also highlights other improvements that LLMs and chatbots still need. This is only the beginning, bringing both new possibilities and new risks. Nevertheless, these tools clearly have the potential to optimise healthcare services.